Introduction

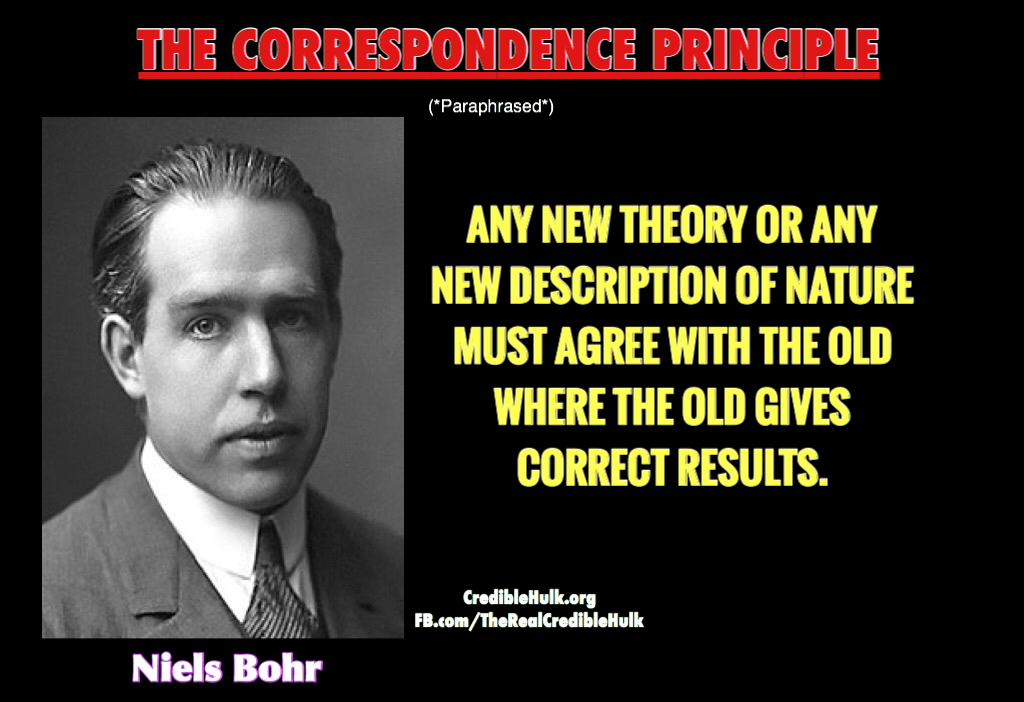

One of the intrinsic features of the scientific process is that it modifies previously accepted knowledge over time. Those modifications take many forms. They may simply add new discoveries to an existing body of accepted knowledge without contradicting prevailing theoretical frameworks. They may require subtle refinements or adjustments to existing theories to account for newer data. They may involve reformulating how certain things are categorized within a particular field so that the groupings make more logical sense and/or are more practical to use. In rare cases, scientific theories are replaced entirely, and new data can even lead to an overhaul of the entire conceptual framework within which work in a particular discipline is performed. In his famous book, The Structure of Scientific Revolutions, the physicist, historian, and philosopher of science Thomas Kuhn referred to such an event as a “paradigm shift” [1],[2]. This tendency toward revision results from efforts to accommodate new information and cultivate as accurate a representation of the world as possible.